Boosting the JavaScript gRPC SDK with NaaE

TL;DR

- The Bottleneck. Node.js is single-threaded. While excellent for I/O, @grpc/grpc-js blocks the event loop during heavy protobuf deserialisation, triggering HTTP/2 backpressure and slowing down data ingestion.

- The Fix. We implemented NAPI-as-an-Engine (NaaE), utilising Rust for connection management and hot paths while maintaining the native Node.js event emitter syntax.

- The Result. A 9,000% increase in data throughput without requiring partners to rewrite their codebase.

Node.js remains the dominant environment for many off-chain workloads, arbitrage bots, and indexers due to its massive ecosystem and ease of concurrency. However, on high-throughput chains like Solana, "ease of use" often comes with a hidden tax: the single-threaded event loop.

When you stream gigabytes of account updates, the default @grpc/grpc-js library forces your application to choose between processing data or receiving it. It cannot do both efficiently. The result is artificial latency – not from the network, but from the library itself choking on deserialisation.

By rebuilding the engine in Rust while keeping the shell in TypeScript, we created a client that offers superior performance with the same usability. Here is how we broke the single-thread limit.

Why isn't @grpc/grpc-js good enough?

To understand the failure mode, you must look at the constraints of the default libraries.

The components and constraints of grpc-js

- Client SDK: The @grpc/grpc-js JavaScript SDK is a pure JavaScript implementation of the gRPC client. It provides the necessary APIs for using services defined by protocol buffers (protobuf) and manages the underlying HTTP/2 connection and stream state.

- The single-thread bottleneck: Node.js's event loop operates on a single thread. While Node.js is excellent for concurrent I/O (handling many connections), all JavaScript execution, including protobuf deserialization and calling the user's data callback function, happens on this single thread.
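The effect of that single thread can be reproduced without any gRPC code at all. The sketch below is only an illustration: `busyWork` is a hypothetical stand-in for CPU-bound work such as protobuf deserialisation, and `measureTimerDelay` is a helper name chosen for this example. It shows that a timer scheduled for 0 ms cannot fire until the synchronous work has finished.

```typescript
// Hypothetical stand-in for CPU-bound work such as protobuf deserialisation.
function busyWork(ms: number): void {
  const end = Date.now() + ms;
  while (Date.now() < end) {
    // Spin synchronously: nothing else can run on the JavaScript thread.
  }
}

// Measures how late a 0 ms timer fires when synchronous work runs first.
function measureTimerDelay(blockMs: number): Promise<number> {
  return new Promise((resolve) => {
    const scheduledAt = Date.now();
    setTimeout(() => resolve(Date.now() - scheduledAt), 0);
    busyWork(blockMs); // Runs to completion before the timer callback gets a turn.
  });
}

measureTimerDelay(50).then((delay) => {
  console.log(`0 ms timer fired after roughly ${delay} ms`);
});
```

Any I/O callback, including the one that drains an incoming gRPC stream, is delayed in the same way as the timer here.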

HTTP/2 flow control and backpressure in gRPC

- gRPC/HTTP/2 flow control: gRPC, which is built on HTTP/2, uses a window-based flow control mechanism at both the stream level and the connection level. This is a critical feature designed to prevent a fast sender from overwhelming a slow receiver (known as backpressure).

  The "window size" defines the amount of data (in bytes) the receiver is prepared to accept without explicitly granting more credit.

- The internal pause/resume cycle: When a large volume of data is quickly pushed from the network I/O buffer to the Node.js process:

  - The @grpc/grpc-js library's internal buffer fills up because the single JavaScript thread is busy deserialising protobufs and executing the user's callback function for the received messages.

  - As the buffer fills, the receiver's flow control window shrinks. When it hits zero, the gRPC layer internally pauses the stream by not sending a WINDOW_UPDATE frame to the server. This is a deliberate backpressure signal.

  - The server is then halted from sending more data until it receives a new WINDOW_UPDATE frame.

  - The stream resumes only after the JavaScript thread processes enough buffered messages to free up space, at which point the client sends a WINDOW_UPDATE frame to "refill" the window and signal the server to continue.
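The pause/resume cycle above can be sketched as a toy simulation. This is not how @grpc/grpc-js or any HTTP/2 stack is implemented; `simulateFlowControl` and its parameters are names invented for this example, time is reduced to discrete "ticks", and credit is returned once per tick. It only illustrates how a slow consumer leaves the sender waiting on window credit.

```typescript
interface SimulationResult {
  ticks: number;          // Rounds needed to deliver and process every message.
  throttledTicks: number; // Rounds in which the sender ran out of window credit.
}

function simulateFlowControl(
  windowBytes: number,
  messageBytes: number,
  totalMessages: number,
  processedPerTick: number,
): SimulationResult {
  if (messageBytes > windowBytes || processedPerTick < 1) {
    throw new RangeError("message must fit the window and the receiver must make progress");
  }
  let credit = windowBytes; // Bytes the sender may still send.
  let sent = 0;
  let buffered = 0;
  let processed = 0;
  let ticks = 0;
  let throttledTicks = 0;

  while (processed < totalMessages) {
    ticks++;

    // Sender: transmit until the window is exhausted.
    while (sent < totalMessages && credit >= messageBytes) {
      credit -= messageBytes;
      sent++;
      buffered++;
    }
    // Data is still pending but there is no credit left: the sender must wait.
    if (sent < totalMessages) throttledTicks++;

    // Receiver: process what it can, then return that credit (the WINDOW_UPDATE).
    const done = Math.min(buffered, processedPerTick);
    buffered -= done;
    processed += done;
    credit += done * messageBytes;
  }
  return { ticks, throttledTicks };
}

// Same window and workload; only the receiver's processing speed differs.
const slow = simulateFlowControl(400, 100, 10, 1);
const fast = simulateFlowControl(400, 100, 10, 10);
console.log({ slow, fast });
```

With the window and message sizes held constant, the only variable is how quickly the receiver frees buffer space, which is the part a blocked JavaScript thread controls.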

How does NAPI solve the problem?

N-API enables our TypeScript SDK to leverage the performance and ecosystem of Rust behind a JavaScript entry point.

Imagine the power of Rust with the ease and accessibility of JavaScript? BONKERS!

This way, connection configuration and management can be handled in Rust, replacing grpc-js as the gRPC layer.

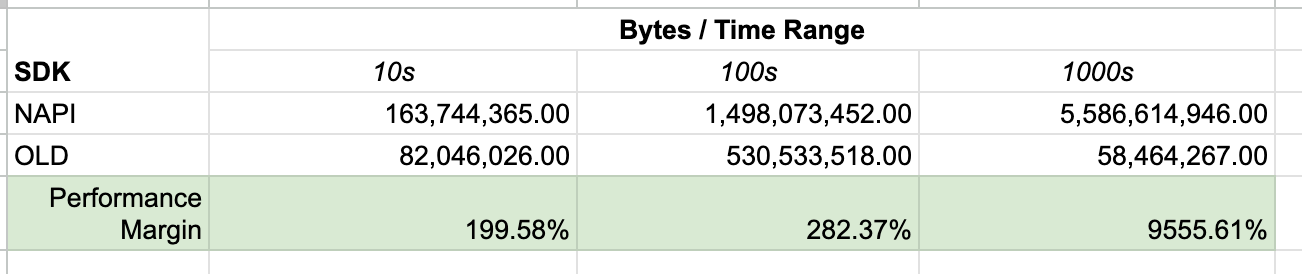

Performance comparison: napi versus grpc-js

Data Retrieved Per Unit of Time:

How we boosted our SDK while maintaining backwards compatibility using NaaE

After exploring different methods of interaction across Node and Rust, we have arrived at an optimal interaction between the two ecosystems that enables the following:

- Maximises compatibility with the greater Node ecosystem.

- Minimises boilerplate and glue code between Node and Rust.

- Maximises maintainability across teams by keeping NAPI optimisations as decoupled as possible from other changes.

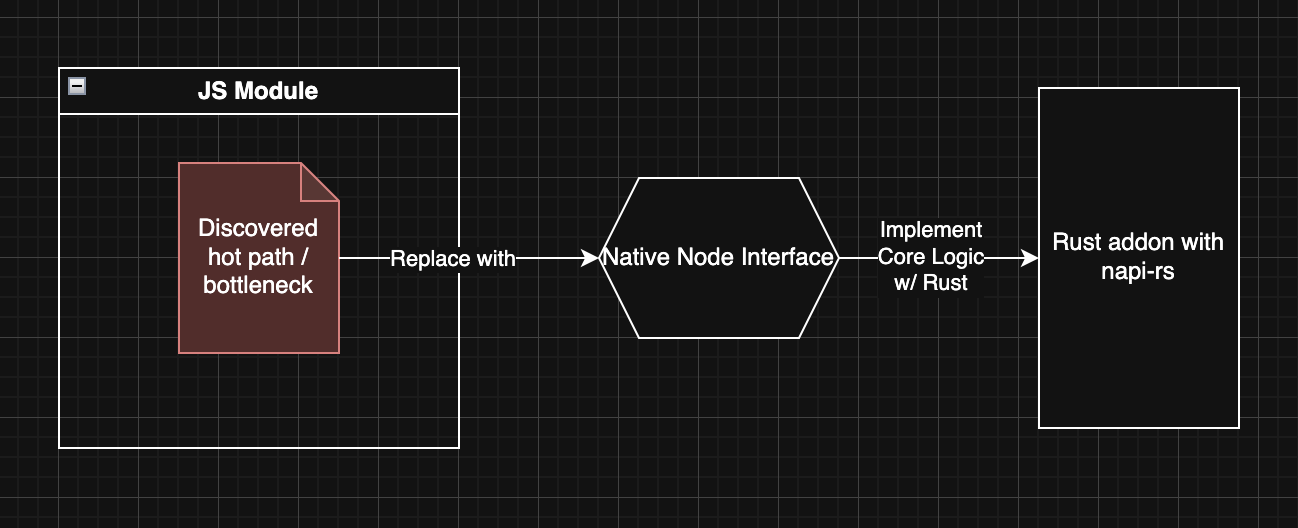

NAPI-as-an-Engine architecture

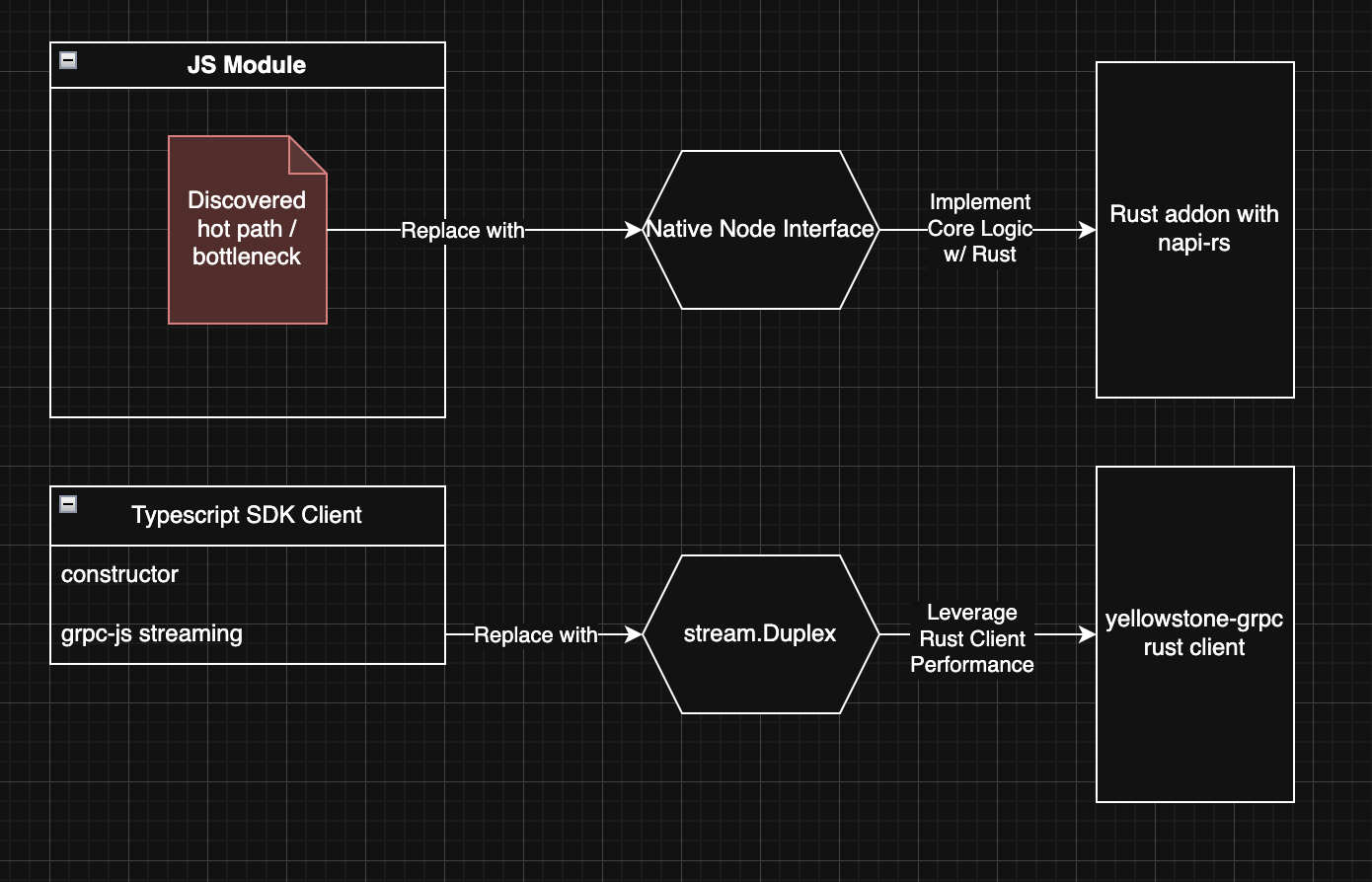

The core of NaaE is to deliberately optimise hot paths with Rust via napi-rs, while maintaining greater ecosystem compatibility in JS by utilising native interfaces as wrappers.

Implementation in the TypeScript SDK

In our case, grpc-js was slowing down both connection setup and runtime, bottlenecking the performance of our TypeScript client.

We set out to improve this with the following key objectives:

- Maintain our previous client’s signatures to reduce migration friction as much as possible.

- Make the client performant by using Rust's asynchronous runtime.

- Make the code maintainable and easy to iterate on.

This was a heavy undertaking, because performance and user experience rarely go together: performance optimisations tend to narrow the solution domain, sacrificing generality and, with it, user-friendliness.

However, with NaaE, maintaining user experience while squeezing out superior performance becomes possible.

For example, the main access point of our TypeScript SDK uses Node’s standard event-driven emitter.on("event", callback) syntax.

Hence, our Rust implementation needed to adopt the same interfaces; otherwise, this would break our partners’ and clients’ previous code.

The trivial solution would be to mirror all expected interfaces in Rust; however, due to NAPI’s limitations, this would require a significant amount of code and be difficult to maintain. The solution? NaaE.

We use Node’s native stream.Duplex as a wrapper around our Rust Dragonsmouth gRPC client, replacing the slow grpc-js connection management and runtime.

By doing so, the emitter.on("event", callback) syntax is maintained while the Rust implementation in napi-rs serves as the engine that enables our new SDK to deliver 9,000% more data throughput than the old one.
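The wrapper pattern can be sketched roughly as follows. This is not the SDK's actual code: the `Engine` interface, `EngineStream`, and `createMockEngine` are hypothetical names, and the engine is mocked in plain TypeScript so the example runs without a native addon. In a real build, the object behind `Engine` would be whatever the napi-rs binding exports.

```typescript
import { Duplex } from "node:stream";

// Hypothetical shape of what a native binding might expose (an assumption).
interface Engine {
  send(request: object): void;    // Forward a subscription request.
  next(): Promise<object | null>; // Next update, or null when the stream ends.
}

class EngineStream extends Duplex {
  private readonly engine: Engine;
  private pumping = false;

  constructor(engine: Engine) {
    super({ objectMode: true }); // Updates are passed as objects, not bytes.
    this.engine = engine;
  }

  // Writable side: hand requests to the engine.
  _write(request: object, _encoding: BufferEncoding, callback: (error?: Error | null) => void): void {
    try {
      this.engine.send(request);
      callback();
    } catch (err) {
      callback(err as Error);
    }
  }

  // Readable side: pull updates from the engine while the consumer wants more.
  _read(): void {
    if (this.pumping) return;
    this.pumping = true;
    this.pump().catch((err) => this.destroy(err as Error));
  }

  private async pump(): Promise<void> {
    try {
      for (;;) {
        const update = await this.engine.next();
        if (update === null) {
          this.push(null); // Signal end of stream.
          return;
        }
        if (!this.push(update)) return; // Consumer is full; wait for the next _read().
      }
    } finally {
      this.pumping = false;
    }
  }
}

// Pure-TypeScript mock so the sketch runs without a native addon.
function createMockEngine(updates: object[]): Engine {
  const pending = [...updates];
  return {
    send(_request: object): void { /* A real engine would forward this upstream. */ },
    async next(): Promise<object | null> { return pending.shift() ?? null; },
  };
}

const stream = new EngineStream(createMockEngine([{ slot: 1 }, { slot: 2 }]));
stream.on("data", (update) => console.log("update", update));
stream.on("end", () => console.log("stream ended"));
stream.write({ accounts: {} });
```

Because `Duplex` already inherits from `EventEmitter`, callers keep using `.on("data", ...)` and `.write(...)`, and only the object behind the `Engine` interface needs to change.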

Benchmarks and verification

For our users, getting all the data as fast as possible is what matters. To measure that, we subscribe to all account updates using the NAPI implementation and the older SDK, collect the responses over the same fixed testing window, and compare the total output size. The NAPI implementation consistently returns more data within the same window, which means higher throughput.
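A measurement of this kind could be sketched as below. This is an assumption about the general shape of such a benchmark, not the harness we used: `measureBytes` and `percentIncrease` are illustrative helpers, and the data source is a synthetic `EventEmitter` rather than a real subscription.

```typescript
import { EventEmitter } from "node:events";

// Counts the bytes delivered through "data" events during a fixed window.
function measureBytes(source: EventEmitter, windowMs: number): Promise<number> {
  return new Promise((resolve) => {
    let total = 0;
    const onData = (chunk: Uint8Array): void => {
      total += chunk.byteLength;
    };
    source.on("data", onData);
    setTimeout(() => {
      source.off("data", onData); // Stop counting when the window closes.
      resolve(total);
    }, windowMs);
  });
}

// Percentage increase of `candidate` over `baseline`.
function percentIncrease(baseline: number, candidate: number): number {
  if (baseline <= 0) throw new RangeError("baseline must be positive");
  return ((candidate - baseline) / baseline) * 100;
}

// Synthetic source standing in for a real subscription stream.
const fakeSource = new EventEmitter();
const timer = setInterval(() => fakeSource.emit("data", new Uint8Array(1024)), 1);

measureBytes(fakeSource, 50).then((bytes) => {
  clearInterval(timer);
  console.log(`received ${bytes} bytes in 50 ms`);
});
```

Read literally as a percentage increase, 9,000% means the new client returns 91 times the data of the old one in the same window, since `percentIncrease(1, 91)` evaluates to 9000.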

Getting started with the new SDK

The new SDK code is open-source and available in the public repo. A package will soon be published on npm.

For your code, just bump the version number, and it'll work. No code changes are required, and the API has no breaking changes.

If anything breaks, please open an issue here.